A review of robust and generalization of reinforcement learning

link: images.tgz

1. Introduction

- Silver D, Hubert T, Schrittwieser J, et al. Mastering Chess and Shogi by Self-Play with a General Reinforcement Learning Algorithm.[J]. arXiv: Artificial Intelligence, 2017.

- Kober J, Bagnell J A, Peters J, et al. Reinforcement learning in robotics: A survey[J]. The International Journal of Robotics Research, 2013, 32(11): 1238-1274.

- Hu Y, Da Q, Zeng A, et al. Reinforcement Learning to Rank in E-Commerce Search Engine: Formalization, Analysis, and Application[C]. knowledge discovery and data mining, 2018: 368-377.

- Zoph B, Vasudevan V K, Shlens J, et al. Learning Transferable Architectures for Scalable Image Recognition[C]. computer vision and pattern recognition, 2018: 8697-8710.

- Bello I, Pham H, Le Q V, et al. Neural Combinatorial Optimization with Reinforcement Learning[C]. international conference on learning representations, 2017.

- Henderson P A, Islam R, Pineau J, et al. Deep Reinforcement Learning that Matters[C]. national conference on artificial intelligence, 2018: 3207-3214.

- Lanctot M, Zambaldi V, Gruslys A, et al. A Unified Game-Theoretic Approach to Multiagent Reinforcement Learning[C]. neural information processing systems, 2017: 4190-4203.

- Zhang A, Ballas N, Pineau J, et al. A Dissection of Overfitting and Generalization in Continuous Reinforcement Learning.[J]. arXiv: Learning, 2018.

- Zhang C, Bengio S, Hardt M, et al. Understanding deep learning requires rethinking generalization[C]. international conference on learning representations, 2017.

- Kawaguchi K, Kaelbling L P, Bengio Y, et al. Generalization in Deep Learning[J]. arXiv: Machine Learning, 2017.

- Madry A, Makelov A, Schmidt L, et al. Towards Deep Learning Models Resistant to Adversarial Attacks[C]. international conference on learning representations, 2018.

2. Preliminaries

2.1. Adversarial learning

其中$|.|$为任意满足定义的norm。特定条件下对抗训练的弱收敛性目前已经得到了理论证明:对于数据集中的所有样本,存在一个$\epsilon$使得其周围半径为$\epsilon$的norm ball中不存在对抗样本。相比于robust optimization,分布鲁棒性优化(distributional robust optimization)通过松弛样本背后的概率分布构造不确定性集合,以下两种优化目标互为对偶

其中$W_c(.,.)$为Wasserstein距离,$c(.,.): \mathbb{R}^{n} \times \mathbb{R}^{n} \rightarrow [0, + \infty)$表示Wasserstein距离定义中概率测度支撑集上的transportation cost,是一个严格凸函数。不难看出,distributional robust optimization相当于robust optimization的松弛版本,在保障可验证的对抗样本安全边界的前提下,往往可以得到比后者更好的训练稳定性与收敛性。

2.2. RL in continuous control

强化学习的最终目标,是在控制agent与环境交互的过程中学习,从而最大化期望总收益。为了求解Markov随机过程中的收益最大化问题,最大期望总收益可以分解为Bellman equation的形式。另$V \in \mathbb{R}^{|\mathcal{S}|}$为价值函数,$r \in {\mathbb{R}^{|\mathcal{S}|}}$为奖励函数,$\mathcal{P}^{\pi} \in \mathbb{R}^{|\mathcal{S}|} \times \mathbb{R}^{|\mathcal{S}|}$表示在环境中执行策略$\pi$带来的转移概率,标准Bellman operator $\mathcal{T}$是$\ell_{\infty}$ norm下的$\gamma$-压缩映射:

由于Bellman方程是一个线性递推式,可以看出求解最优策略$\pi$与求解最有价值$V$是等价的。因此按照直接求解目标的不同,强化学习方法可以大致分为基于价值(value-based)、基于策略(policy-based)、以及二者的结合actor-critic三类。

2.2.1. Value-based RL

基于价值求解RL问题,最具代表性的算法是Q-learning,令 $Q(s,a):=r(s,a)+\mathbb{E}_{s’,a’\sim\pi}[\sum_{t=1}^{\infty}\gamma^{t}r(s’,a’)]$ 为状态-价值函数,Q-learning的迭代形式如下:

由于Q-learning实际学习的目标是当前的$Q$函数所代表的greedy策略,因而是一种off-policy的算法,即当前正在学习优化的策略可以与采样策略不同。大部分基于价值的DRL算法都沿用了Q-learning的框架,深度学习兴起之后,DQN开创性地使用神经网络表示$Q$函数,训练出的RL模型可以在Atari游戏任务上达到人类水平。后续的Dueling DQN、prioritized replay、Rainbow DQN、Ape-X等方法则从不同的角度进一步提升了DQN的性能。

2.2.2. Policy-based RL

相较于value-based RL,policy-based RL方法在过去很长一段时间的研究中都没有受到足够的重视,其代表性方法策略梯度(policy gradient)长期被认为是一种方差极大难以收敛的算法。主要原因有有二:第一,策略梯度方法直接将期望总收益作为目标进行优化,使得该算法只能采用on-policy训练,这意味着策略梯度方法无法很好地应用于更复杂的RL问题中;第二,当与神经网络结合时,策略梯度训练过程中无法像value-based方法一样从理论上保证策略提升的单调性。

为了解决上述问题,CPI提出了一种在原策略分布的一个有限范围内进行策略迭代,得到了一种较保守的策略迭代方式,然而该方法仍无法解决on-policy训练的问题。相比之下,TRPO采用重要性采样(importance sampling)来解耦合模型的训练与采样过程,并理论证明了使用了importance sampling后策略提升的下界:

其中$\pi_{\theta}$为当前优化的策略,$\pi’$为采样使用的策略,$J(\pi)=\mathbb{E}_{\pi}[\sum_{t=0}^{\infty}\gamma^{t}r_{t}]$为策略$\pi$下的期望总收益,$A:\mathcal{S} \times \mathcal{A} \rightarrow \mathbb{R}$为优势函数(advantage function)。基于该下界不难看出,在限定$D_{KL}(\pi’||\pi_{\theta})$范围内进行策略迭代可以保证策略的单调性提升,目标函数可以写作

为了求解限定KL范围内的策略提升问题,TRPO引入了自然梯度下降,通过共轭梯度法求解自然梯度,配合步长的指数线性搜索来保证迭代严格满足理论性质;ACKTR采用克罗内克积(Kronecker product)来高效地近似Fisher信息矩阵,从而降低了TRPO的复杂度;PPO提出了两种替代损失函数,采用TRPO的一阶近似来进一步保障训练的稳定与高效。

2.2.3. Actor-critic

placeholder

2.3. Adversarial RL

- Pinto L, Davidson J, Sukthankar R, et al. Robust adversarial reinforcement learning[C]. international conference on machine learning, 2017: 2817-2826.

- Tamar A, Glassner Y, Mannor S, et al. Optimizing the CVaR via sampling[C]. national conference on artificial intelligence, 2015: 2993-2999.

- Tessler C, Efroni Y, Mannor S, et al. Action Robust Reinforcement Learning and Applications in Continuous Control[C]. international conference on machine learning, 2019: 6215-6224.

- Abdullah M A, Ren H, Bouammar H, et al. Wasserstein Robust Reinforcement Learning.[J]. arXiv: Learning, 2019.

- Mankowitz D J, Levine N, Jeong R, et al. Robust Reinforcement Learning for Continuous Control with Model Misspecification.[J]. arXiv: Learning, 2019.

- Pattanaik A, Tang Z, Liu S, et al. Robust Deep Reinforcement Learning with Adversarial Attacks[J]. adaptive agents and multi-agents systems, 2018: 2040-2042.

- Packer C, Gao K, Kos J, et al. Assessing Generalization in Deep Reinforcement Learning[J]. arXiv: Learning, 2018.

- Rajeswaran A, Ghotra S, Ravindran B, et al. EPOpt: Learning Robust Neural Network Policies Using Model Ensembles[J]. arXiv: Learning, 2016.

3. 思路推进

- Summarize the drawback of existing works

- opponent modeling: training instability, as reported in the MARL paper

- adversarial off-policy sampling: learning with a bad sampling policy may result in slow convergence and training instability

- SL regularizers: lack of interpretability

- we show that incorporating a robustness-related regularizer in the value estimation can contribute to better robustness while maintain a good performance in training environments at the same time. (Propose the framework here) Why?

1) Does not need to change the environment during training. This makes the algoritm more applicable to many real world environments which has no access to the environment parameters;

2) Similar to the intuition of the generalization in SL, the optima with high curvatures on the loss surface often correspond to bad generalization ability of the models, because a small pertubation in the parameter space can result in severe performance degration. An appropriately defined regularizer can prevent the model from overfitting in sharp minima. - Based on the intuition we propose to use a regularizer in the Bellman policy iteration scheme. The regularizer should punish the states that has high value estimations but are vulnerable to small perturbations.

- Define each concept mathematically:

- Define Q_adv first, with perturbations subjected to a norm constraint. Thus Q_adv(s, a) can be represented implicitly using adversarial attack of Q(s, a) w.r.t. s

- Define \pi^ and \pi_adv^ as the optimal improvement of the current policy \pi in a infinitely small norm ball

- Compute the closed-form solution for the improvement of \pi under Q (probably using a Lemma???)

- Define \phi(\pi) as the discrepancy between the adversarial optimal policy and the optimal policy in the \epsilon norm ball, with the discrepancy measured by KL divergence

- Propose the algorithm under the Q-learning framework

- Assumptions and proof for the convergence of the Q-learning algorithm under a tabular setting (probably using a Theorem???)

- Propose the algorithm under the TD3 framework

- Discussion about the intuition behind the approach (better with figures and figures and figures…)

References:

- Azar M G, Gómez V, Kappen H J. Dynamic policy programming[J]. Journal of Machine Learning Research, 2012, 13(Nov): 3207-3245.

- Geist M, Scherrer B, Pietquin O. A Theory of Regularized Markov Decision Processes[J]. arXiv preprint arXiv:1901.11275, 2019.

4. 实验结果

训练过程:

- 正则化函数不会对训练过程与结果带来非常显著的影响,换言之,正则函数对模型的正则化提升不需要以降低模型在训练环境上的表现为代价

- 我们发现较小的$\epsilon$就已经足以达到满意的训练效果,虽然更大的$\epsilon$可以为模型带来更强的正则化,但过大的$\epsilon$不一定总是能够带来正面的实验效果,在一些复杂的实验环境,如Humanoid-v2下,过大的$\epsilon$可能会为训练带来带来更多的不稳定性

- should be waiting for the new emsemble experimental results在多个不同的环境中集成训练往往会带来训练的不稳定,且会降低默认训练环境下的模型表现,这种现象在复杂环境中体现的更加明显,相比于集成训练,我们的方法可以更好地保证训练的稳定性与较好的训练结果

测试环境:

- 在所有的实验环境上,我们提出的SIR-TD3无论是在NT还是HV设定下都表现出比原本的TD3算法更强的泛化能力,尤其当测试环境与训练环境相差较大时,正则化函数为模型带来的泛化能力提升也更加明显

- 使用更大的扰动区间$\epsilon$有时可以为模型带来更好的正则化效果,但$\epsilon$大小与模型泛化能力之间的关系并不总是单调提升的。总体来说,我们发现较小的$\epsilon$就已经足以带来较好的正则化效果。

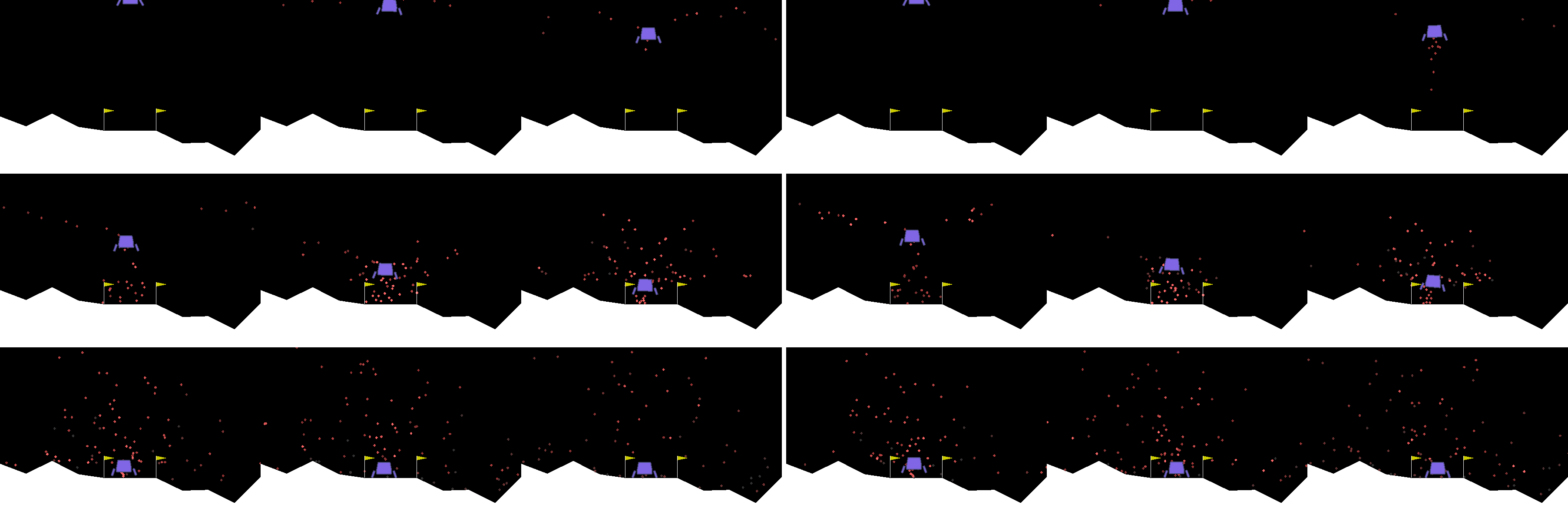

Empirical evidence:

- 在LunarLanderContinuous-v2环境上,我们发现模型学习到的策略更加具有可解释性。在该环境下,飞船距离着陆点较远时迅速下降,靠近着陆点时用较大的喷射力度稳定平衡就可以获得较高的reward,但这种策略同时也会在重力加速度发生变化时使得飞船更容易坠毁,遗憾的是,大部分无模型强化学习最终都会收敛到这种对环境变化缺乏泛化能力的策略。从图中可以看出,在TD3算法中加入我们提出的正则化函数后,模型前期的下降速度会比原始版本的TD3算法要慢,也就是所,在正则化函数的效果下,模型确实学习到了更加符合人类直觉的、更具有可解释性的、具有更强泛化能力的策略

4.1. Training

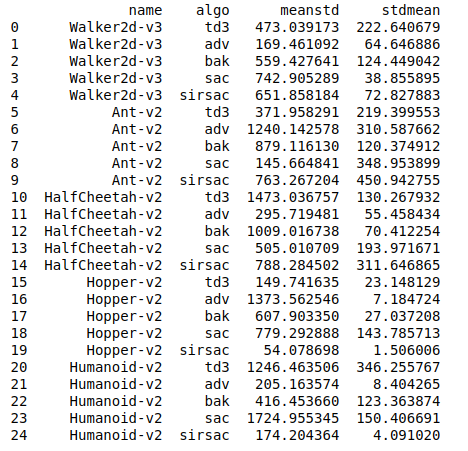

以下实验结果小数点后四舍五入只保留整数部分,符号±后面的数字是half deviation

| Algo | Lunar | Walker2d | HalfCheetah | Hopper | Ant | Humanoid | |

|---|---|---|---|---|---|---|---|

| TD3 | 282±8 | 5089±65 | 11581±62 | 3529±163 | 3541±78 | 6259±251 | |

| SIR-TD3 | 288±9 | 4007±12 | 11189±57 | 3377±31 | 5806±48 | 6489±12 | |

| Ensemble | 275±8 | 4759±480 | 9282±77 | 3518±188 | 5653±172 | 6548±417 | |

| Ensemble+SIR | 276±10 | 4578±19 | 11639±60 | 3534±22 | 5923±40 | 5976±280 | |

| SAC | 284±9 | 5496±51 | 16701±47 | 3754±6 | 6875±414 | 6486±4 | |

| SIR-SAC | 286±9 | 5385±16 | 16154±73 | 3550±1 | 7171±31 | 5997±5 |

Note that it is unfair to directly compare the performance between the models with ensemble training and those without ensemble training, since the formers have never experienced any of the testing environments except the unmodified one used for training simulations.

Conclusions:

- The models with SIR have much lower variance than those without an SIR. The phenomenon is more significant in complex environments such as Walker2d-v3, Hopper-v2, and Humanoid-v2, where the SIR-TD3 models have lower variance in magnitude than their TD3 counterparts.

- Compared with our proposed SIR models, ensemble training can significantly increase the model variance in training environments. The result can be concluded by comparing the performance standard deviation of the TD3 models and the Ensemble models, where the models with ensemble training have higher variance in magnitude than the TD3 models. Similarly, in most cases, the SIR-TD3 models with ensemble training also show higher variance of performance than the SIR-TD3 models.

This implies that our SIR is a better solution for RL generalization than the ensemble training especially when model stability is required.

4.2. Integral area under curves

| epsilon | Lunar | Walker2d | HalfCheetah | Hopper | Ant | Humanoid | |

|---|---|---|---|---|---|---|---|

| 0.00 NT | 29.76 | 2781.17 | 2487.13 | 4389.07 | 1708.02 | 3136.36 | |

| 0.05 NT | 45.69 | 3541.74 | 2695.17 | 4458.08 | 2551.94 | 3015.09 | |

| 0.10 NT | 54.33 | 3248.23 | 2585.83 | 4709.54 | 1778.62 | 3048.04 | |

| 0.00 HV | 245.56 | 968.47 | 8705.90 | 920.92 | 3556.13 | 4389.07 | |

| 0.05 HV | 255.77 | 1797.55 | 8880.63 | 1096.20 | 5495.18 | 4458.08 | |

| 0.10 HV | 264.84 | 1638.21 | 8751.48 | 1005.73 | 3846.90 | 4709.54 |

4.3. Comparison for ensemble training methods

| Algo | Lunar | Walker2d | HalfCheetah | Hopper | Ant | Humanoid | |

|---|---|---|---|---|---|---|---|

| Ens HV | 270.51 | 4526.66 | 8979.61 | 3070.99 | 5511.94 | 6290.51 | |

| Ens+SIR HV | 271.16 | 4564.77 | 11322.53 | 3472.28 | 5701.74 | 5793.81 | |

| Ens NT | 28.95 | 1616.33 | 1245.57 | 449.80 | 709.64 | 1764.21 | |

| Ens+SIR NT | 17.04 | 1810.70 | 1267.14 | 654.71 | 2289.40 | 2992.88 |

4.4. Standard variations on 10 seeds